Updated Evaluation Framework published

About the author

Richard Bailey Hon FCIPR is editor of PR Academy's PR Place Insights. He teaches and assesses undergraduate, postgraduate and professional students.

The UK Government Communication Service has published an updated version of its evaluation framework to help communicators across the wider public sector better measure the success of their work and appraise their activities.

Does this mean that those in the private sector can ignore the lessons from this guide? Surely, their work is already more targeted and results-oriented?

It’s the pursuit of quantifiable results that has been the main driver of false measures such as the discredited advertising value equivalent (AVE), and as speakers at the AMEC conference argued, the goal of ‘PR attribution’ is problematic. If everything has to be geared to the bottom line, how do you evaluate CSR and philanthropy programmes? How do you isolate the effects of PR from other marketing communication activity?

Government comms looks at the problem differently: the focus is on using communication to bring about behaviour change (in other words, on outcomes, not outputs).

As Alex Aiken, executive director of government communications, writes:

‘Running a successful campaign requires clear objectives, underpinned by a theory of behaviour change that understands how communication activity will be effective.’

So evaluation is not an isolated activity, but part of campaign planning. Here, the GCS uses the OASIS campaign planning guide:

- Objectives: These should be C-SMART (Challenging, Specific, Measurable, Attainable, Relevant and Time-bound)

- Audience Insights: How to reach key groups through eg gatekeepers and influencers

- Strategy: What choices are you making, and why?

- Implementation: Focus on CARE (Content, Amplifying message, Reasons to share, Emotional appeal)

- Scoring (evaluation): This should focus primarily on outcomes and outtakes.

Examples are given of typical metrics (inputs, outputs, outtakes and outcomes) for the three types of public sector campaign activity:

- Behaviour change: ‘The vast majority of government communication seeks to change behaviours in order to implement government policy or improve society.’

- Recruitment: ‘Government invests a lot of money in recruiting people for important jobs (teachers, armed forces, nurses etc).’

- Awareness: ‘Some campaigns solely seek to raise awareness of an issue to change people’s attitude.’

Then there’s a framework for calculating return on investment (ROI). This involves five steps, and requires assumptions to be clearly identified:

- Objectives: These should be focused on quantifiable behavioural outcomes

- Baseline: What would happen if no communication activity was undertaken?

- Trend: What are the forecasts, based on baseline trends?

- Isolation: Exclude or disaggregate other factors that will affect the outcome to make sure the change observed has been caused by the campaign

- Externalities: Account for any consequential or collateral effects of your campaign, positive and negative.
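To make the five steps more concrete, here is a minimal Python sketch of how they might combine into a single ROI figure. It is illustrative only: the field names, the assumption that each unit of outcome can be given one monetary value, and the conventional (benefit - cost) / cost ratio are my assumptions rather than formulas taken from the GCS framework, and the example numbers are invented.

```python
from dataclasses import dataclass


@dataclass
class CampaignRoiInputs:
    """Hypothetical inputs for a behaviour-change campaign ROI estimate."""
    observed_outcome: float    # measured behavioural outcome (Objectives step), e.g. sign-ups
    baseline_forecast: float   # expected outcome with no campaign (Baseline and Trend steps)
    other_factors: float       # share of the change attributed to non-campaign factors (Isolation step)
    value_per_outcome: float   # assumed monetary value of one extra unit of outcome
    externalities: float       # net monetary value of side effects, positive or negative
    campaign_cost: float       # total spend on the campaign


def campaign_roi(inputs: CampaignRoiInputs) -> float:
    """Return net benefit per unit of spend: (benefit - cost) / cost."""
    if inputs.campaign_cost <= 0:
        raise ValueError("campaign_cost must be positive")
    # Uplift over what would have happened anyway
    uplift = inputs.observed_outcome - inputs.baseline_forecast
    # Strip out change caused by other factors so only the campaign's effect remains
    attributable = uplift - inputs.other_factors
    # Monetise the attributable outcome, then add externalities
    benefit = attributable * inputs.value_per_outcome + inputs.externalities
    return (benefit - inputs.campaign_cost) / inputs.campaign_cost


if __name__ == "__main__":
    example = CampaignRoiInputs(
        observed_outcome=12_000,
        baseline_forecast=10_000,
        other_factors=500,
        value_per_outcome=100.0,
        externalities=-5_000.0,
        campaign_cost=50_000.0,
    )
    print(f"ROI: {campaign_roi(example):.2f}")  # ROI: 1.90
```

In this hypothetical case, a £50,000 campaign sees 12,000 sign-ups against a forecast of 10,000, with 500 of the difference put down to other factors. Valuing each extra sign-up at £100 and deducting £5,000 of negative externalities gives an ROI of 1.9, or £1.90 net return per £1 spent. The point is less the arithmetic than the discipline: every input is an assumption that has to be stated and defended.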

Measuring intangible assets

What about intangibles – such as measuring reputation? ‘There is still much confusion about how to best measure and manage reputation.’ But ‘measuring reputation is a good form of organisational listening.’

The framework suggests three questions:

- Reputation with whom? Which stakeholders are most critical?

- Reputation for what? Measuring the factors that are causally linked to reputation is important in helping to deliver a theory of behaviour change.

- Reputation for what purpose? What stakeholder behaviour or attitude are you seeking to maintain or change?

Ethical use of data

The GCS has produced a Data Ethics Framework to ensure the appropriate use of data in government and the wider public sector. The key steps are:

- Start with a clear user need

- Be aware of relevant legislation and codes of practice

- Use data that is proportionate to the user need

- Understand the limitations of the data

- Use robust practices and work within your skillset

- Make your work transparent and be accountable

- Embed data responsibly

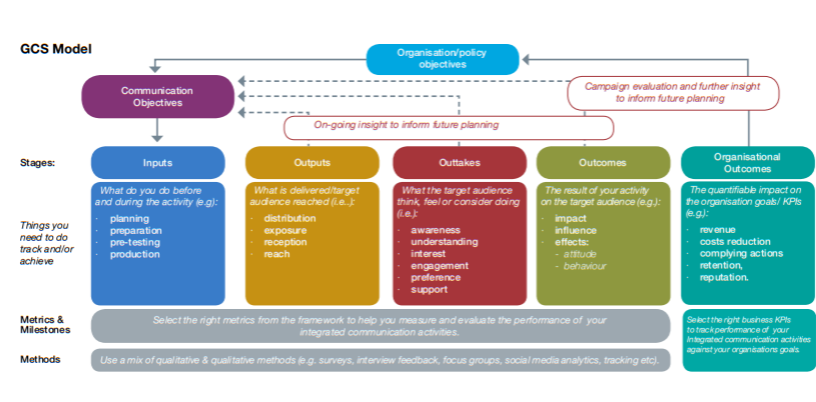

GCS model

The last page of the framework presents the GCS model for evaluation. It’s a very clear summary of the steps needed to prove the value of any public relations and communication activity; most readers will turn straight to this page and return to it frequently.

It may be that you have your own model that’s fit for purpose, but I suspect that the focus on behaviour change in government comms means that this is a more sophisticated approach than you’ve adopted to date.

PR Place reported on the AMEC Summit held in Barcelona on June 12-14.

- 2010: A story of evolution and survival

- Evaluation: from media to management

- AMEC day two: a question of integration

PR Academy offers the AMEC International Certificate in Measurement and Evaluation.